This summer, I have been working on an experimental React concurrent mode profiler as part of the inaugural batch of the MLH Fellowship. One of the biggest engineering challenges I had was to optimize our canvas rendering.

This was no small task. A single 10-second profile can have over 100,000 flame chart stack frames, along with hundreds of React data elements. Since users can interact with their profiles by hovering, panning, and zooming into the data, our rendering code had to be efficient to avoid a janky experience.

The "before" state

Our project was forked from an early prototype that Brian Vaughn of the React core team built in late 2019. Brian's prototype already included these optimizations:

- It did not use any abstraction layers. During React's commit phase, a long function would loop through the data and draw to the canvas. We were thus confident that there were no inefficiencies apart from those in our code.

- Elements that were offscreen or narrower than 1 pixel were not rendered. This limited the number of calls to the slow Canvas API.
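To make the second optimization concrete, here is a minimal sketch of that kind of culling. It is not the prototype's actual code: the `Frame` and `Viewport` shapes, the `isWorthDrawing` and `drawFrames` names, and the fixed row height are all assumptions made for illustration, and only horizontal culling is shown.

```typescript
// Hypothetical data shapes; the real profiler's types may look different.
interface Frame {
  startMs: number; // timestamp at which the stack frame starts
  durationMs: number; // how long the stack frame ran
  depth: number; // row of the flame chart the frame sits in
}

interface Viewport {
  offsetMs: number; // timestamp shown at the left edge of the canvas
  msPerPixel: number; // current zoom level
  widthPx: number; // visible canvas width
}

// Minimal subset of CanvasRenderingContext2D, so the sketch runs without a DOM.
interface RectContext {
  fillRect(x: number, y: number, w: number, h: number): void;
}

const ROW_HEIGHT_PX = 20; // assumed row height

// A frame is skipped if it is narrower than 1 pixel or entirely offscreen.
function isWorthDrawing(frame: Frame, viewport: Viewport): boolean {
  const x = (frame.startMs - viewport.offsetMs) / viewport.msPerPixel;
  const width = frame.durationMs / viewport.msPerPixel;
  if (width < 1) return false; // too narrow to be visible
  if (x + width <= 0) return false; // entirely left of the viewport
  if (x >= viewport.widthPx) return false; // entirely right of the viewport
  return true;
}

// Draws only the frames that pass the check and returns how many were drawn.
function drawFrames(
  ctx: RectContext,
  frames: Frame[],
  viewport: Viewport,
): number {
  let drawn = 0;
  for (const frame of frames) {
    if (!isWorthDrawing(frame, viewport)) continue;
    const x = (frame.startMs - viewport.offsetMs) / viewport.msPerPixel;
    const width = frame.durationMs / viewport.msPerPixel;
    ctx.fillRect(x, frame.depth * ROW_HEIGHT_PX, width, ROW_HEIGHT_PX);
    drawn += 1;
  }
  return drawn;
}
```

Every skipped frame saves at least one canvas call, which is why this check pays off when most of a profile is zoomed out of view.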

Unfortunately, these optimizations weren't enough. When a significant number of elements were on screen, interactions stuttered noticeably as frame rates dropped to 20 FPS or less. Although the app was still very usable, this experience was less than ideal. We wanted to fix it.

In this post, I'll walk you through how I arrived at a solution that improved our product's UX. You can also find more information and discussions in our GitHub issue!

The problem, broken down further

Before we begin, we must first locate the biggest areas for improvement so that our solutions will have the largest impact.

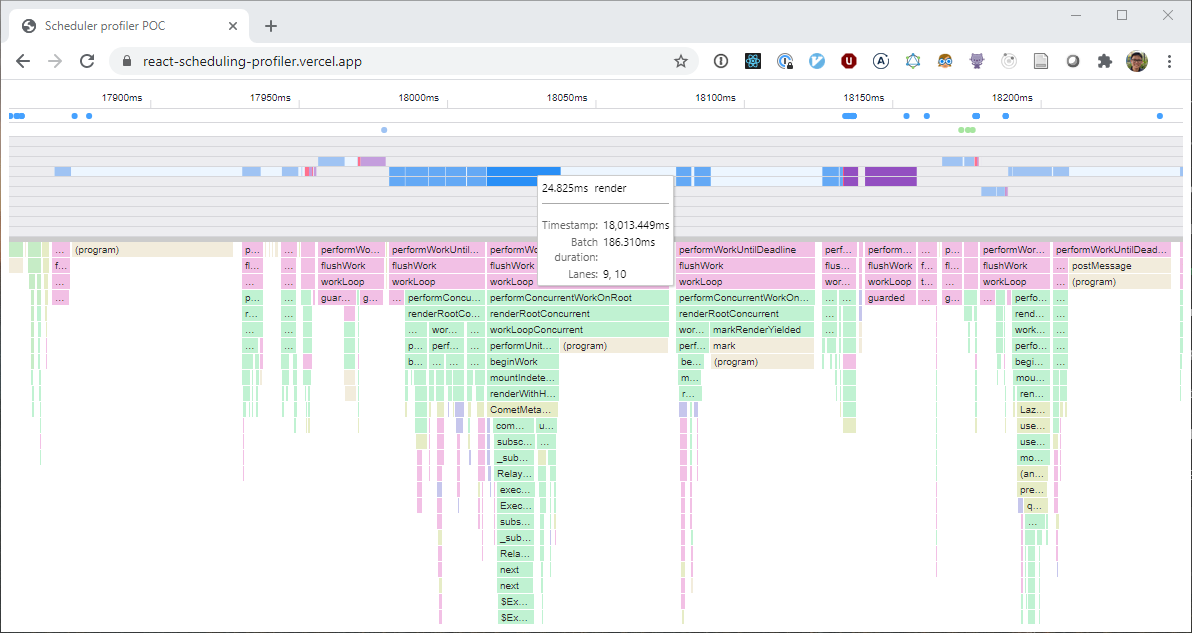

First, I turned to Chrome DevTools's performance tab to profile some interactions. The profiles told us that our flame chart rendering was the slowest part of each animation frame by far. This matched my expectations, as we had many more flame chart nodes than React data elements.

Note how long `renderFlamechart` took compared to the other `render*` functions.

The profiles also provided further evidence that full-canvas renders were already very efficient. Most of the execution time was spent in the green boxes, which are canvas API calls.

Second, I realized it was unnecessary to render the whole canvas on every interaction. Specifically, when the user hovers over the canvas, we only need to redraw when:

- The cursor enters a flame chart frame or React event/measure: we want to show that it's hovered by changing its background color.

- The cursor exits the frame/event/measure: we want to render the normal background color for the exited element.
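As a rough illustration of this rule, the sketch below only reports that a redraw is needed when the hovered element changes. The `Hoverable` shape, the `hitTest` helper, and the `HoverTracker` class are hypothetical names for this example, not the profiler's actual code.

```typescript
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Hypothetical stand-in for a flame chart frame or a React event/measure.
interface Hoverable {
  id: string;
  rect: Rect;
}

// Returns the topmost element under the cursor, or null if there is none.
// Elements later in the array are assumed to be drawn on top.
function hitTest(elements: Hoverable[], x: number, y: number): Hoverable | null {
  for (let i = elements.length - 1; i >= 0; i--) {
    const r = elements[i].rect;
    const insideX = x >= r.x && x < r.x + r.width;
    const insideY = y >= r.y && y < r.y + r.height;
    if (insideX && insideY) return elements[i];
  }
  return null;
}

class HoverTracker {
  private hoveredId: string | null = null;

  // Returns true only when the cursor entered or exited an element,
  // which are the only two cases where the canvas needs a redraw.
  update(elements: Hoverable[], x: number, y: number): boolean {
    const hit = hitTest(elements, x, y);
    const nextId = hit ? hit.id : null;
    if (nextId === this.hoveredId) return false;
    this.hoveredId = nextId;
    return true;
  }

  get currentId(): string | null {
    return this.hoveredId;
  }
}
```

A mouse move handler could call `update` on every event and skip rendering whenever it returns `false`, so moving the cursor within a single element costs no canvas work.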

Because profiles often have tiny elements, their hover highlights may only appear for a fraction of a second as a user skims the cursor over them. If our profiler has a low frame rate on hover, the highlights may not even appear at all! This made it essential to optimize hovers. Everyone hunting for that one tiny function call in their profiles, you're welcome.

Solutions immediately ruled out

- Further decreasing the number of elements rendered. This was not possible. In fact, we'd want to render more flame chart frames, so that we don't have to hide narrow ones.

- Pre-rendering things on an offscreen canvas. Unfortunately, this is only helpful if we can predict what the next frame will be. Since we are rendering in response to user input, this was also not possible.

Solutions tried but ruled out

Using ReactART

Interestingly, React has an official renderer that renders to HTML canvases: ReactART. Since React will only commit changes and leave everything else untouched, this sounded exactly like the droid we were looking for!

Sadly, there were significant problems with ReactART:

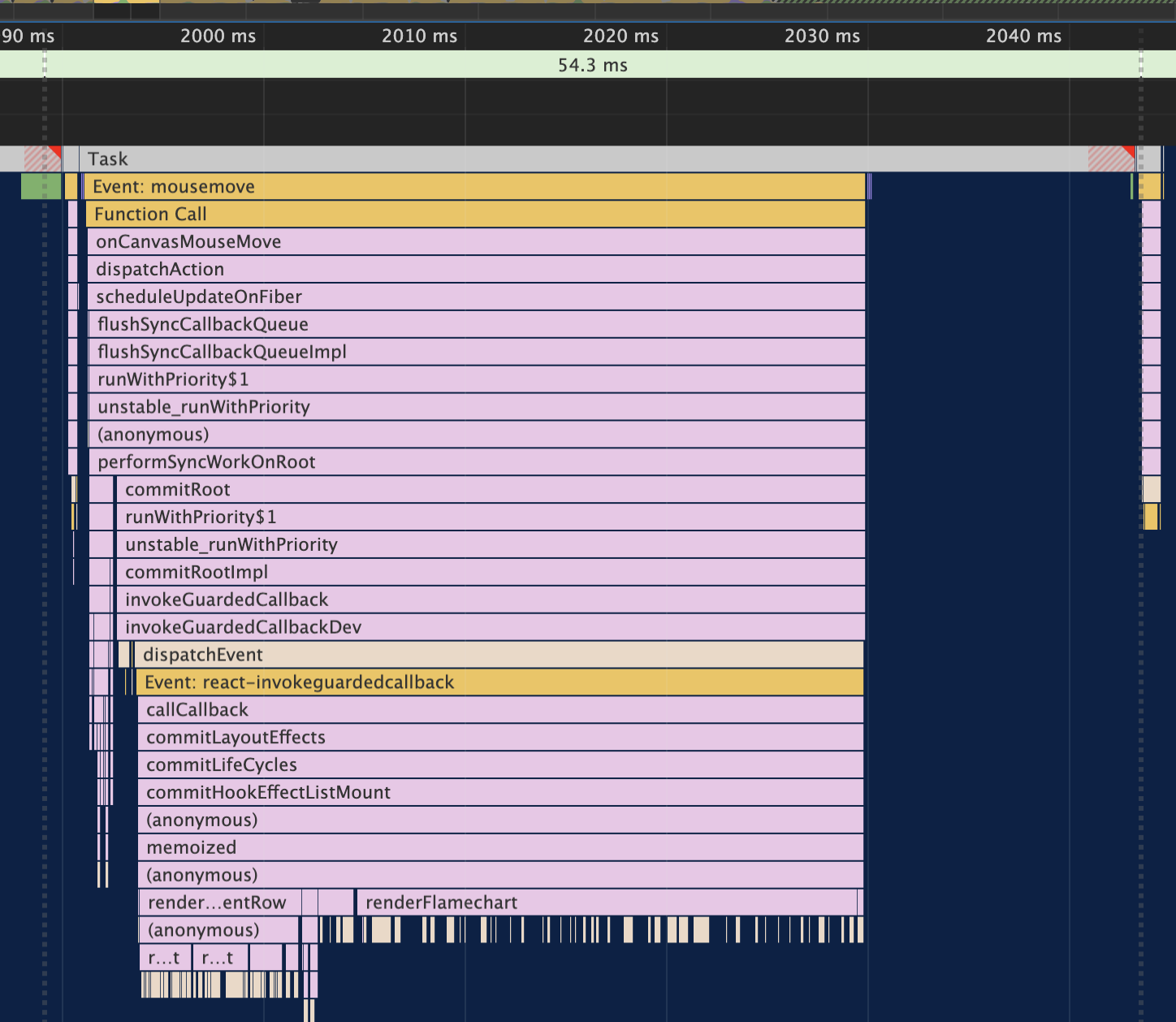

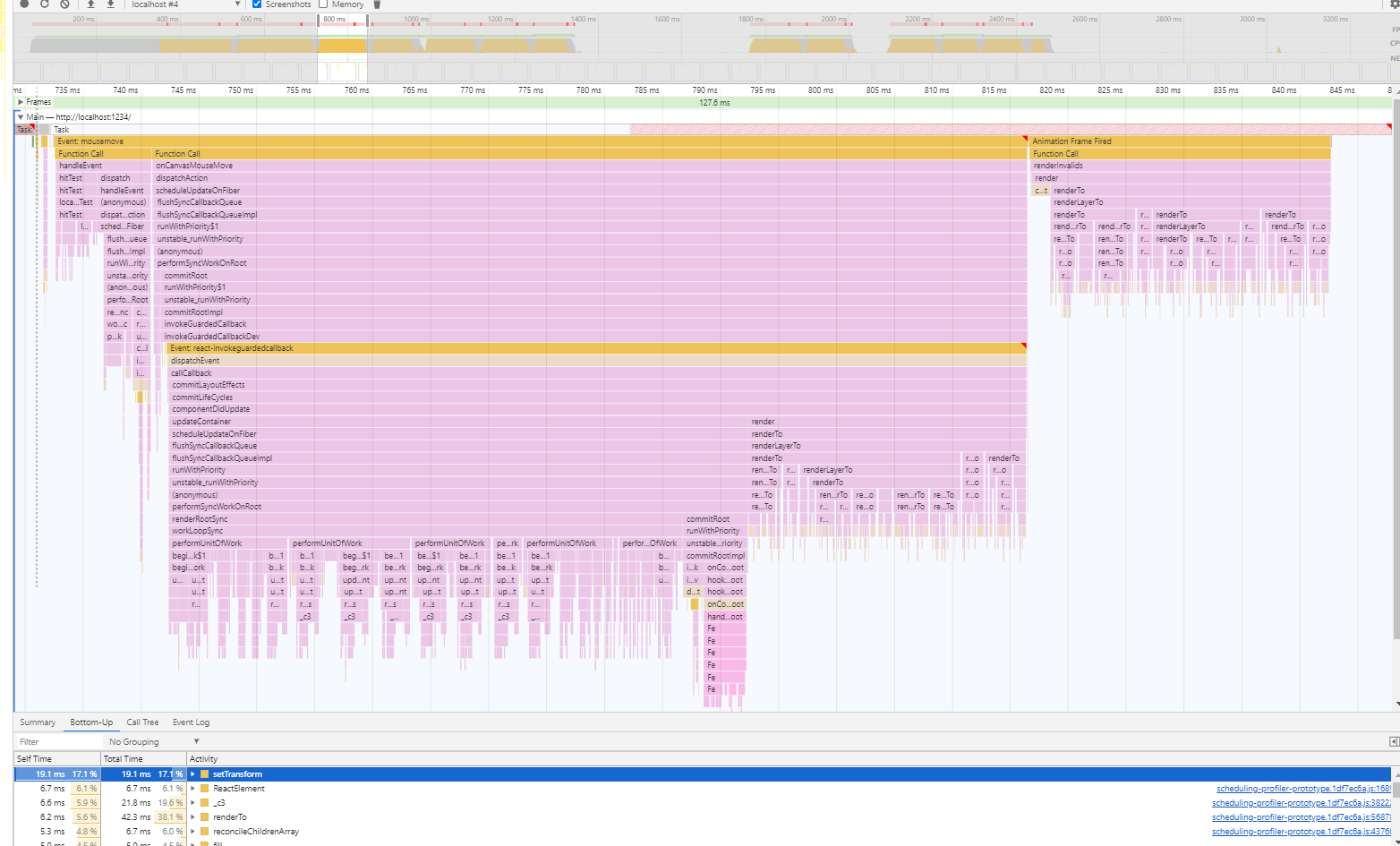

- The biggest issue was that React was not performant enough to work with the number of elements we needed. In the screenshots above, you can see that React spends a lot of time in the reconciliation phase. We could not afford this overhead in our profiler.

- Our profiler renders many rectangles. As luck would have it, ReactART constructed rectangles by adding straight line segments to a path, which is a slow process. Even though I forked ReactART and its underlying Art library to optimize this, performance profiles still showed that a lot of time was somehow spent in `setTransform` calls.

- ReactART is not very well maintained. I didn't think this was a big concern, since our profiler will be part of the React project too and would thus provide an incentive to maintain ReactART.

So, although it would have been nice to use ReactART (and it has a nice API too!), I ultimately decided against it as there were too many problems.

Using WebGL with Pixi.js and Two.js

A friend (who works on the amazing Mobbin) suggested that I try using WebGL next. Because WebGL's native APIs are as hard to use as they are powerful, it is only a viable option if used with an abstraction layer.

He recommended that I try the Pixi.js library. Unfortunately, performance got even worse than before, even with Brian's optimizations present. I also tried Two.js with similar results.

Because I was not familiar with WebGL or Pixi.js, continuing with it could delay or even derail the project. I decided that pursuing a WebGL approach would be too risky.

Later, I found out that Pixi.js can handle a hundred thousand sprites at 20+ FPS. Plus, it looks like both Chrome DevTools and Speedscope use WebGL to render their flame graphs. I suspect I did not use WebGL as effectively as I could have. I should try this again!

A view framework?

When I was thinking about solutions to this problem, I had the wild idea to implement UIKit's UIViews in JavaScript. Apple recommends the model-view-controller pattern in iOS apps, and UIViews are the foundation of the view layer. In a native iOS app, literally everything you see will be an instance of a UIView or one of its subclasses.

Having worked on iOS apps starting about 11 years ago, I was familiar with the view system on iOS. Here's what I liked about its API:

- `UIView`s efficiently drew areas that needed drawing with a `drawRect:` method. `UIView`s have a `needsDisplay` flag that is set to true when the view's contents are invalidated. This allows UIKit to only draw views that need drawing.

- The `UIView` class can be subclassed to implement custom drawing and handle interactions.

- Behaviors can be composed by nesting `UIView`s. This is how `UIScrollView`, `UIStackView` and many other views work.

- The `CGContext` API formed the basis for JavaScript Canvas APIs. This gave me confidence that a `UIView`-like API will work well with JS's Canvas.

If we had such a view framework in JavaScript, interactions that don't need the whole canvas to be redrawn would be easy to implement in a performant way. Note that this means the view framework would only optimize hover interactions. Scroll and zoom still redraw the whole canvas, so their performance would remain roughly unchanged in the current app.
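To give a feel for what a `UIView`-like API could look like in JavaScript, here is a small TypeScript sketch. It is only an illustration under simplifying assumptions, not the design this project ended up with: the `View` and `RectView` classes, the `displayIfNeeded` method, and the `DrawContext` interface are made-up names, and overlapping sibling views are ignored.

```typescript
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Minimal subset of CanvasRenderingContext2D, so the sketch runs without a DOM.
interface DrawContext {
  fillRect(x: number, y: number, w: number, h: number): void;
}

class View {
  private needsDisplay = true; // new views have never been drawn
  private subviews: View[] = [];

  constructor(public frame: Rect) {}

  // Behaviors are composed by nesting views.
  addSubview(view: View): void {
    this.subviews.push(view);
    view.setNeedsDisplay();
  }

  // Marks this view's contents as invalidated.
  setNeedsDisplay(): void {
    this.needsDisplay = true;
  }

  // Subclasses override this to do their custom drawing.
  protected draw(_ctx: DrawContext): void {}

  // Draws this view only if it was invalidated, then visits its subviews.
  // When a parent redraws it paints over its children, so they are forced
  // to redraw as well.
  displayIfNeeded(ctx: DrawContext, force: boolean = false): void {
    const mustDraw = force || this.needsDisplay;
    if (mustDraw) {
      this.draw(ctx);
      this.needsDisplay = false;
    }
    for (const subview of this.subviews) {
      subview.displayIfNeeded(ctx, mustDraw);
    }
  }
}

// Example subclass: fills its own frame with a rectangle.
class RectView extends View {
  protected draw(ctx: DrawContext): void {
    const { x, y, width, height } = this.frame;
    ctx.fillRect(x, y, width, height);
  }
}
```

With a structure like this, a hover handler would call `setNeedsDisplay()` on just the view whose highlight changed, and the next `displayIfNeeded` pass would leave every other view untouched.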

A view framework would also resolve other issues in our profiler:

- Our interaction code was independent from the rendering code. Interaction handling code was forced to recalculate the layout of the elements onscreen. This was annoying as we had to implement any layout changes twice. More importantly, subtle differences in layout calculations resulted in unexpected bugs in the UI.

- Brian's optimizations were duplicated in the multiple render functions. Ideally, any optimizations should be applied automatically so that our rendering is optimal by default.

Because of my experience with UIKit, I was confident that a view framework would address these issues.

The big downside is that we have to implement and maintain a framework. All frameworks incur a certain cost for the benefits they provide, and this was no different. I won't lie, I was very hesitant to do this. A view framework like this is also not common in the JavaScript world, and would be an extra barrier for any potential contributor to overcome.

You could also argue that such an API is antiquated, and declarative APIs like React are the future. In fact, one of React's predecessors at Facebook was a similar framework called UIComponent (see Lee Byron's "Let's Program Like It's 1999" talk).

However, after having tried everything mentioned in previous sections, I decided that this was the right approach to solve all our biggest and deepest problems. It was easy to implement and had a procedural flow, exactly what we want in a thin, performant, and understandable abstraction.

Join me in part 2 where I delve deeper into the design and implementation of the view framework!